Data Handling

The main purpose of NIF is to produce high-quality experimental data that is used to validate theoretical physics. NIF software tools have been designed and constructed to manage and integrate data from multiple sources, including machine state configurations and calibrations, experimental shot data, and pre- and post-shot simulations. During a shot, data from each diagnostic is automatically captured and archived in a database. The arrival of new data triggers the shot analysis, visualization, and infrastructure (SAVI) engine, the first automated analysis system of its kind, which distributes the signal and image processing tasks to a Linux cluster and launches an analysis for each diagnostic. Results are archived in NIF’s data repository, where experimentalists approve them and view them with a web-based tool. A key feature is that scientists can review results remotely or locally, download them, and perform and upload their own analyses.
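The trigger-and-distribute pattern described above can be illustrated with a minimal sketch. It is not the SAVI implementation: the names `DiagnosticData`, `analyze`, and `on_shot_data_arrival` are hypothetical, a local thread pool stands in for the Linux cluster, and the "analysis" is a placeholder that only summarizes the samples.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class DiagnosticData:
    """Raw data captured from one diagnostic during a shot (hypothetical shape)."""
    shot_id: str
    diagnostic: str
    samples: tuple


def analyze(data: DiagnosticData) -> dict:
    """Placeholder analysis: reduce the raw samples to two summary values."""
    return {
        "shot_id": data.shot_id,
        "diagnostic": data.diagnostic,
        "peak": max(data.samples),
        "mean": mean(data.samples),
        "approved": False,  # results wait for experimentalist approval
    }


def on_shot_data_arrival(batch: list, max_workers: int = 4) -> dict:
    """Launch one analysis per diagnostic and collect results by diagnostic name.

    The thread pool is an assumption that stands in for a compute cluster.
    """
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        results = list(pool.map(analyze, batch))
    return {result["diagnostic"]: result for result in results}


if __name__ == "__main__":
    batch = [
        DiagnosticData("shot-001", "diag_a", (1.0, 3.0, 2.0)),
        DiagnosticData("shot-001", "diag_b", (4.0, 4.0)),
    ]
    for name, result in on_shot_data_arrival(batch).items():
        print(name, result["peak"], result["mean"])
```

Keeping each diagnostic's analysis independent of the others is what lets the work be spread across many workers; the sketch reflects that by giving `analyze` no shared state.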

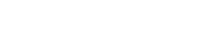

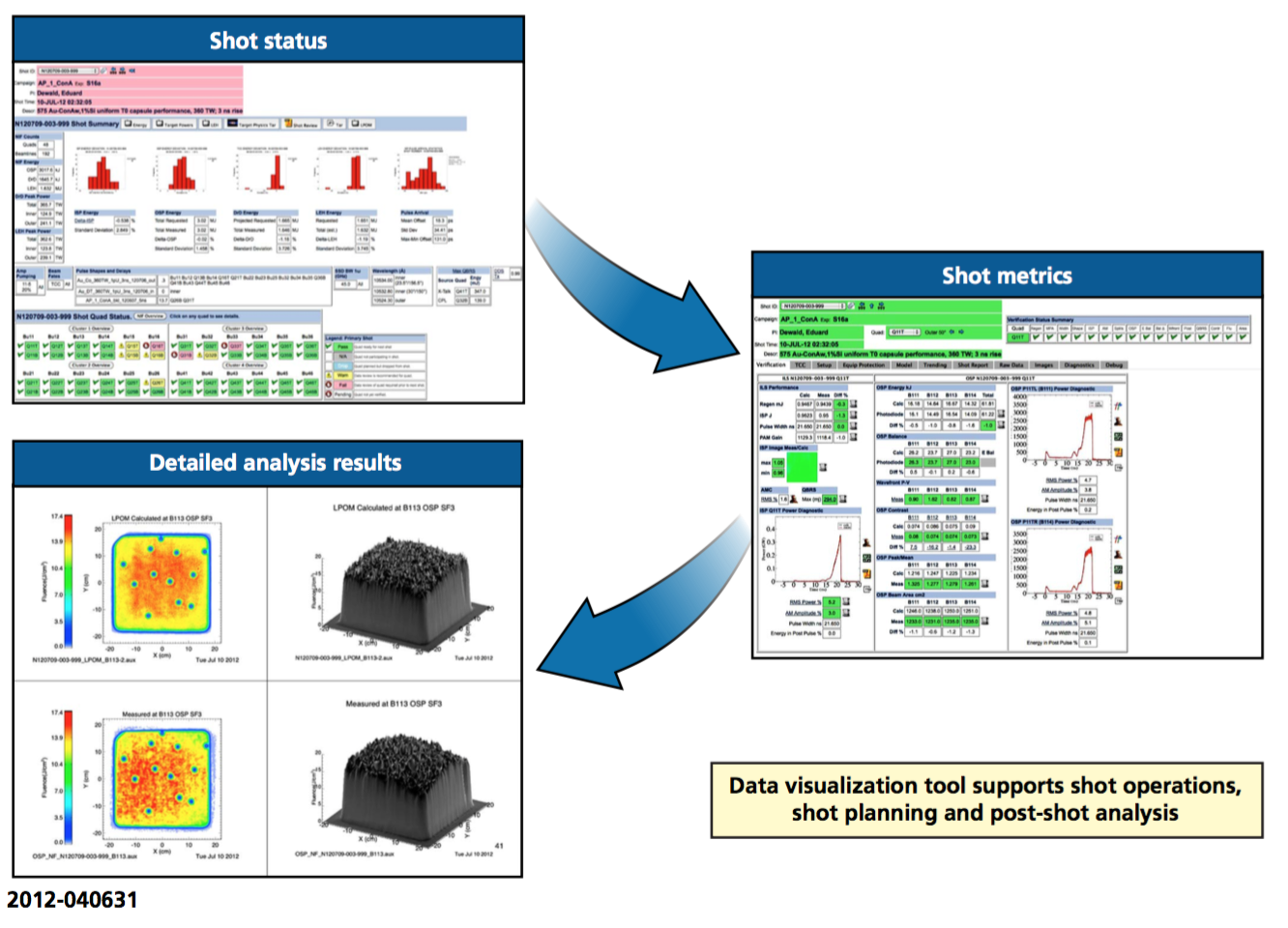

Post-shot data analysis and laser performance reporting are provided by the Laser Performance Operations Model (LPOM). LPOM is linked directly to the Integrated Computer Control System (ICCS) shot database and, on request, can provide the NIF shot director and user within minutes with a report that compares predicted and measured results and summarizes how well the shot met its requested goals. In addition, the LPOM data reporting system can access and display the near-field and far-field images taken at each laser diagnostic location and compare them with predicted images. The results of the post-shot analysis are displayed on the LPOM graphical user interface (see Figure 8-1), while a subset of the analysis is presented to the shot director through a shot supervisor interface.

Figure 8-1. After each shot, users can examine the detailed laser performance for any beamline using a suite of data trending and analysis tools.
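A predicted-versus-measured comparison of the kind such a report contains can be sketched as follows. This is an illustration only, not LPOM code: the `Comparison` record, the `compare` function, the quantity names, and the single percentage tolerance are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Comparison:
    """One row of a hypothetical predicted-versus-measured report."""
    quantity: str
    predicted: float
    measured: float
    deviation_pct: float
    within_tolerance: bool


def compare(predicted: dict, measured: dict, tolerance_pct: float) -> list:
    """Compare each predicted quantity with its measurement.

    Quantities without a measurement are skipped. A predicted value of zero
    is rejected because a relative deviation is undefined for it.
    """
    report = []
    for quantity in sorted(predicted):
        if quantity not in measured:
            continue
        p, m = predicted[quantity], measured[quantity]
        if p == 0:
            raise ValueError(f"predicted value for {quantity!r} is zero")
        deviation = 100.0 * (m - p) / p
        report.append(
            Comparison(quantity, p, m, deviation, abs(deviation) <= tolerance_pct)
        )
    return report


if __name__ == "__main__":
    # Illustrative numbers only, not facility data.
    predicted = {"energy": 100.0, "peak_power": 2.0}
    measured = {"energy": 98.0, "peak_power": 2.5}
    for row in compare(predicted, measured, tolerance_pct=5.0):
        status = "met" if row.within_tolerance else "missed"
        print(f"{row.quantity}: {row.deviation_pct:+.1f}% ({status})")
```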

Experimental data, plus data on the post-shot state of the facility, are housed in the NIF data repository and retrieved from it through the Archive Viewer. This secure archive stores all the relevant experiment information, including target images, diagnostic data, and facility equipment inspections, for 30 years, using a combination of high-performance databases and archival tapes. Retaining the data allows researchers to analyze and interpret results long after a shot, or to build on experimental data originally produced by other scientists. A crucial design feature of the database is that it preserves the pedigree of the data (all the related pieces of information from a particular experiment, such as algorithms, equipment calibrations, configurations, images, and raw and processed data) and thus provides a long-term record of all the linked, versioned shot data.
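One way to picture a pedigree is as a record that travels with every processed result and names the exact inputs that produced it. The sketch below assumes a simplified set of fields; `Pedigree`, `ProcessedResult`, and every field name are hypothetical and do not describe the actual repository schema.

```python
import hashlib
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class Pedigree:
    """Versioned inputs behind one processed result (hypothetical fields)."""
    shot_id: str
    raw_data_id: str
    algorithm: str
    algorithm_version: str
    calibration_version: str
    configuration_version: str

    def fingerprint(self) -> str:
        """Return a stable SHA-256 digest of the pedigree fields.

        Any change to an input version yields a different digest, so two
        results can be checked for identical lineage.
        """
        payload = json.dumps(asdict(self), sort_keys=True).encode("utf-8")
        return hashlib.sha256(payload).hexdigest()


@dataclass(frozen=True)
class ProcessedResult:
    """Processed values stored together with the pedigree that produced them."""
    values: tuple
    pedigree: Pedigree


if __name__ == "__main__":
    original = Pedigree("shot-001", "raw-42", "peak_finder", "1.0", "cal-7", "cfg-3")
    recalibrated = Pedigree("shot-001", "raw-42", "peak_finder", "1.0", "cal-8", "cfg-3")
    result = ProcessedResult((3.0, 2.0), original)
    print(result.pedigree.fingerprint() == original.fingerprint())
    print(original.fingerprint() == recalibrated.fingerprint())
```

Making both records immutable mirrors the idea of a long-term archive: a reanalysis with a new calibration produces a new record with its own pedigree rather than overwriting the old one.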